India’s new rules to regulate big tech aim to tackle deepfakes and AI-driven misinformation

Updated: Saturday, October 25, 2025

India is preparing to introduce sweeping new regulations targeting artificial intelligence (AI)-generated content and the broader digital ecosystem, as the government moves to curb the misuse of emerging technologies such as deepfakes and synthetic media. The proposed amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, announced this week by the Ministry of Electronics and Information Technology (MeitY), would require Big Tech companies to label all AI-generated content and ensure traceability, transparency, and accountability across digital platforms.

The draft proposal comes at a time when countries around the world are grappling with the ethical, political, and security implications of generative AI. From manipulated political videos to fabricated celebrity endorsements, the spread of synthetic content has raised global alarm. India, the world’s largest democracy and one of the biggest social media markets, is particularly concerned about the impact of such technologies on its elections and on its vast, multilingual information environment.

In its statement, MeitY highlighted that the rise of generative AI tools has created new challenges for content authenticity, user safety, and social stability. “Recent incidents of deepfake audio, videos, and synthetic media going viral on social platforms have demonstrated the potential of generative AI to create convincing falsehoods, depicting individuals in acts or statements they never made,” the ministry said. It warned that such content could easily be “weaponized to spread misinformation, damage reputations, manipulate or influence elections, or commit financial fraud.”

The proposed amendments aim to address these risks by holding social media platforms and AI companies accountable for identifying and labeling synthetic content. India’s move mirrors recent initiatives in the European Union and China, both of which have enacted regulations requiring AI-generated material to be clearly labeled. However, New Delhi’s approach appears to go further in specifying visual and temporal requirements for such disclosures, indicating a more enforcement-oriented strategy.

The draft defines “synthetically generated content” as information created, modified, or manipulated using computers or algorithms in a way that makes it appear authentic. This definition includes AI-generated images, videos, audio recordings, and even text-based outputs that mimic human authorship or fabricate events.

Officials say that the definition is intentionally broad, reflecting the evolving nature of AI and its ability to produce highly realistic content indistinguishable from genuine material. MeitY emphasized that this type of media poses “serious risks to democratic discourse, national security, and individual rights.”

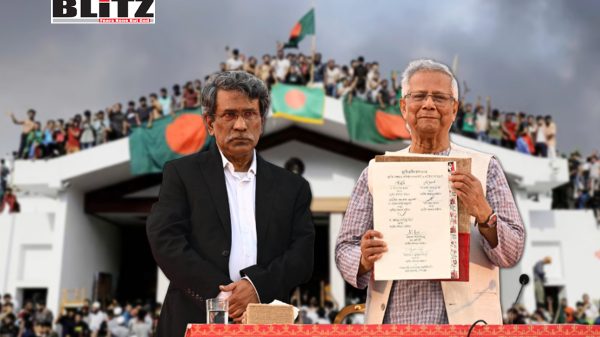

A particularly striking case that drew attention to this issue occurred in May, when Muhammad Yunus, the chief adviser of Bangladesh’s interim government, accused Indian media outlets of spreading “false and misleading propaganda” about tensions between his administration and the military. Several of the clips circulating online were later found to be manipulated videos purporting to show senior Bangladeshi officials making inflammatory remarks, an incident that underscored how easily misinformation can cross borders in the digital age.

Under the proposed framework, any platform or service that allows the creation or dissemination of AI-generated content will be required to ensure “visible labeling, metadata traceability, and transparency for all public-facing AI-generated media.”

Some of the most notable requirements include:

Visible Markers: AI-generated visuals must carry a clear label covering at least 10% of the visible display area. For audio, the first 10% of the clip’s duration must contain an audible or visible notice that the content was synthetically generated.

Metadata Traceability: Platforms must embed metadata that indicates whether content has been generated or altered by AI, allowing regulators and users to trace its origin.

Transparency Reports: Large platforms (defined as those with more than five million users) will be required to publish regular transparency reports detailing their compliance efforts, including how they detect, label, and remove synthetic content.

Accountability for Platforms: Companies such as OpenAI, Meta, Google, and X (formerly Twitter) would bear legal responsibility for failing to label or remove deceptive AI-generated material.
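The two visible-marker thresholds reduce to simple arithmetic. The Python sketch below is an illustrative reading of the draft's 10% rules, not an official compliance check: the function names and the choice to round the pixel area up to a whole pixel are hypothetical, and the final rules may measure "display area" or "duration" differently.

```python
# Illustrative reading of the draft's 10% visible-marker thresholds.
# Hypothetical helper names; NOT an official MeitY compliance tool.
LABEL_PERCENT = 10  # share of display area / clip duration named in the draft


def min_label_area(width_px: int, height_px: int) -> int:
    """Smallest label area in pixels covering at least 10% of the frame.

    Integer arithmetic rounds up, so the result never falls below 10%.
    """
    area = width_px * height_px
    return (area * LABEL_PERCENT + 99) // 100


def min_audio_notice_seconds(duration_s: float) -> float:
    """Length of the opening segment (first 10% of the clip) that must carry the notice."""
    return duration_s * LABEL_PERCENT / 100


def label_is_large_enough(label_w: int, label_h: int,
                          width_px: int, height_px: int) -> bool:
    """True if a rectangular label meets the 10%-of-area threshold."""
    return label_w * label_h >= min_label_area(width_px, height_px)


if __name__ == "__main__":
    print(min_label_area(1920, 1080))                  # 207360
    print(min_audio_notice_seconds(60.0))              # 6.0
    print(label_is_large_enough(1920, 108, 1920, 1080))  # True
```

Under this reading, a label on a 1920×1080 frame must cover at least 207,360 pixels (for example, a full-width strip 108 pixels tall), and a 60-second clip would need the notice throughout its first six seconds.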

The ministry also invited feedback from the public, industry stakeholders, and civil society until November 6, after which the final version of the rules will be prepared for parliamentary review.

India’s decision to strengthen oversight over AI and Big Tech reflects a delicate balancing act between fostering innovation and mitigating harm. The country’s thriving technology sector, home to one of the world’s largest developer communities and a growing number of AI startups, has voiced concerns that excessive regulation could stifle creativity and deter investment.

However, MeitY officials argue that responsible governance is essential to maintaining trust in digital technologies. “Our goal is not to hinder innovation but to ensure that technological progress does not come at the cost of truth, safety, or democracy,” one senior official told local media.

Industry experts, meanwhile, say that while the rules may appear stringent, they are necessary given India’s scale and diversity. With over 800 million internet users and hundreds of regional languages, the country is uniquely vulnerable to misinformation campaigns that exploit linguistic and cultural nuances.

One of the driving forces behind India’s new regulatory push is concern over election integrity. With future elections in mind, the government has grown increasingly wary of how deepfake videos and AI-generated messages could be used to manipulate public opinion.

“Imagine a scenario where a fabricated video shows a major political figure making inflammatory remarks just days before polling,” said a Delhi-based cyber policy analyst. “The damage could be irreversible before the truth is verified.”

The Election Commission of India has already expressed interest in collaborating with MeitY and social media platforms to develop systems for detecting and flagging manipulated political content in real time.

The new rules could set a precedent for other democracies seeking to rein in AI misuse without suppressing free expression. India’s digital policies are often watched closely by developing countries with similar demographics and technological infrastructures.

While tech companies have yet to issue formal statements, early industry reactions suggest cautious optimism. Some major firms, including Google and Meta, have already introduced their own labeling systems for AI-generated content in line with global regulatory trends.

Nevertheless, compliance challenges remain. Implementing metadata traceability across platforms will require significant infrastructure upgrades, and defining what constitutes “synthetic” content in every context may prove complex.

India’s proposed amendments to its IT rules signal a turning point in how democracies approach the governance of artificial intelligence. As digital misinformation becomes more sophisticated and pervasive, the world’s largest democracy appears determined to take a leading role in defining ethical standards for AI transparency and accountability.

Whether these measures will succeed in curbing deepfakes and restoring trust in online information remains to be seen. But one thing is clear: India’s message to Big Tech is that the era of unregulated digital power is coming to an end.